While making progress with my rendering engine, one of my goals for this week is to finally implement some kind of frustum culling for the meshes. I could have taken the easier route by only using the pre-built bounding spheres that come with every 3D model loaded in an XNA program, but I wanted tighter-fitting bounding boxes instead. They simply work better for selection and picking, and more meshes get culled out of the frustum, which means fewer false positives and less off-screen geometry being rendered.

Today I have finally finished the first major step, creating the bounding boxes. Simply figuring out how to perfectly create the boxes proved to be a frustrating chore. It wasn’t really the formula to create a box from points that was the difficult part, but getting the correct set of points from each mesh. This required a proper understanding of the GetData method for the VertexBuffer object of each mesh part. I will show you how I obtained the data to create those boxes.

There are plenty of questions online about how to correctly build a bounding box for a mesh object, and a lot of them are answered with outdated information, or with answers that don’t fit that particular user’s case. I’ve browsed through several solutions and a few code samples, but they were not working for me. Sometimes the program crashed with an out-of-bounds exception, and other times the boxes were obviously misaligned with the meshes, even after double checking that the correct transformations were in place. But I finally came up with a solution that combines a few approaches to read and add the vertex data.

Building The Box

Bounding boxes are just simple geometric boxes, and can be represented with two three-dimensional points, the minimum and maximum coordinates. The distance between those two points is the length of the box’s longest diagonal, and the points can be thought of as the upper right corner in the front of the box (the maximum), and the lower left corner in the back (the minimum). These boxes are usually created as mesh metadata, during build time or when resources are initialized. It would be very costly to read the vertices and rebuild the bounding boxes on every frame. Besides, you should use matrix transformations to keep them up to date. Here is how we would usually initialize a mesh model:

```csharp
public MeshModel(String modelPath)
{
    /* Load your model from a file here */

    // Set up model data
    boundingBoxes = new List<BoundingBox>();

    Matrix[] transforms = new Matrix[model.Bones.Count];
    model.CopyAbsoluteBoneTransformsTo(transforms);

    foreach (ModelMesh mesh in model.Meshes)
    {
        Matrix meshTransform = transforms[mesh.ParentBone.Index];
        boundingBoxes.Add(BuildBoundingBox(mesh, meshTransform));
    }
}
```

This would typically go in the constructor or initialization method of the class used to keep your model object and all of its related data. In this case, we have a List of BoundingBox objects, used to keep track of the upper and lower bounds of every mesh the model might have. Possible uses include basic picking and collision testing, and debugging those tests by drawing wireframe boxes on the screen (which I will cover later in this article).

You may have noticed the BuildBoundingBox method being called to fill the BoundingBox list. This is where we will create an accurate, tight-fitting box for every mesh, and to do this we will need to read the vertex data for all of its mesh parts. It requires a ModelMesh object and a Matrix object, which is the bone transformation for that particular mesh.

This method loops through all the mesh parts, keeping track of the minimum and maximum vertex coordinates found in the mesh so far, and returns the smallest possible bounding box that contains those vertices:

```csharp
private BoundingBox BuildBoundingBox(ModelMesh mesh, Matrix meshTransform)
{
    // Create initial variables to hold min and max xyz values for the mesh
    Vector3 meshMax = new Vector3(float.MinValue);
    Vector3 meshMin = new Vector3(float.MaxValue);

    foreach (ModelMeshPart part in mesh.MeshParts)
    {
        // The stride is how big, in bytes, one vertex is in the vertex buffer.
        // We have to use this as we do not know the makeup of the vertex.
        int stride = part.VertexBuffer.VertexDeclaration.VertexStride;

        // Assumes each vertex starts with position, normal and texture coordinates
        VertexPositionNormalTexture[] vertexData = new VertexPositionNormalTexture[part.NumVertices];
        part.VertexBuffer.GetData(part.VertexOffset * stride, vertexData, 0, part.NumVertices, stride);

        // Find minimum and maximum xyz values for this mesh part
        Vector3 vertPosition = new Vector3();

        for (int i = 0; i < vertexData.Length; i++)
        {
            vertPosition = vertexData[i].Position;

            // Update our values from this vertex
            meshMin = Vector3.Min(meshMin, vertPosition);
            meshMax = Vector3.Max(meshMax, vertPosition);
        }
    }

    // Transform by mesh bone matrix.
    // Note: transforming only these two points is exact for translation and
    // positive scale; a rotation can leave them no longer the extremes.
    meshMin = Vector3.Transform(meshMin, meshTransform);
    meshMax = Vector3.Transform(meshMax, meshTransform);

    // Create the bounding box
    BoundingBox box = new BoundingBox(meshMin, meshMax);
    return box;
}
```

A lot of important stuff just happened here. First is the setting up of vertexData, which is an array of VertexPositionNormalTexture structures. This is one of several built-in vertex structures that can be used to classify and organize vertex data. In particular, I used this one because my vertex buffer contains position, normal and texture coordinates up front, and no color data. It will help us determine where our position data is located, which is the only data needed to create our box.

However, this is not enough to assess the alignment and structure of the vertex buffer. We also need to know the vertex stride, which is simply the number of bytes that each vertex occupies in the buffer. This number will vary depending on how your meshes are created and what data was imported, and it can even vary with each vertex buffer. With this piece of info, stepping through the vertex buffer should now be straightforward, with the vertex stride ensuring that each read starts at the beginning of a vertex. The vertexData then goes through an inner loop where we simply examine each vertex, seeing if we have found a new minimum or maximum position. The minimum starts out at float.MaxValue and the maximum at float.MinValue, so the very first vertex replaces both.
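If the position happens to be the first element of each vertex, a variation is to read only the positions into a Vector3 array and let the stride skip over the rest of each vertex. This is a minimal sketch under that assumption, which is worth checking against your own VertexDeclaration. The class and method names (VertexHelper, ReadPositions) are hypothetical, and the GetData overload is the same one used in BuildBoundingBox:

```csharp
using Microsoft.Xna.Framework;
using Microsoft.Xna.Framework.Graphics;

public static class VertexHelper
{
    // Hypothetical helper: reads just the position of every vertex in a mesh part.
    // Assumes the position is a Vector3 stored at byte offset 0 of each vertex.
    public static Vector3[] ReadPositions(ModelMeshPart part)
    {
        int stride = part.VertexBuffer.VertexDeclaration.VertexStride;
        Vector3[] positions = new Vector3[part.NumVertices];

        // Start at this part's first vertex and read one Vector3 (12 bytes)
        // every 'stride' bytes, ignoring whatever else the vertex contains.
        part.VertexBuffer.GetData(
            part.VertexOffset * stride, positions, 0, part.NumVertices, stride);

        return positions;
    }
}
```

With this approach the array type no longer has to match the full vertex layout, so a buffer that carries extra data such as tangents or colors after the position can be read the same way.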

After the loop is done, we have the only two points that matter, and these are transformed by the mesh’s parent bone matrix. This is exact when the bone transform only translates and scales; a rotated mesh needs more care, because the two transformed points may no longer be the extremes. Finally, a new bounding box is created from these two points and returned. Optionally, you can also create a custom bounding sphere from the bounding box.
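For meshes whose bone transform includes a rotation, a common approach is to transform all eight corners of the untransformed box and fit a new axis-aligned box around them. The following is a minimal sketch of that idea; BoundingBoxHelper and TransformBox are hypothetical names, not part of XNA:

```csharp
using Microsoft.Xna.Framework;

public static class BoundingBoxHelper
{
    // Hypothetical helper: returns an axis-aligned box that encloses
    // 'localBox' after it has been transformed by 'transform'.
    public static BoundingBox TransformBox(BoundingBox localBox, Matrix transform)
    {
        // The eight corners of the box in its original space
        Vector3[] corners = localBox.GetCorners();

        // Transform every corner, not just the min and max
        Vector3[] transformed = new Vector3[corners.Length];
        Vector3.Transform(corners, ref transform, transformed);

        // Fit a new axis-aligned box around the transformed corners
        return BoundingBox.CreateFromPoints(transformed);
    }
}
```

In BuildBoundingBox this would replace the two Vector3.Transform calls, for example `return BoundingBoxHelper.TransformBox(new BoundingBox(meshMin, meshMax), meshTransform);` with meshMin and meshMax left untransformed. The result is conservative rather than perfectly tight. Transforming each vertex position before taking the minimum and maximum would give the tightest box, at the cost of one transform per vertex.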

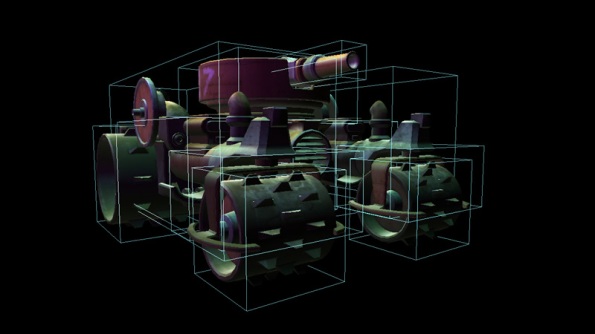

Drawing the boxes for debugging

Now with our boxes stored in place, let’s put them to some use. We are going to draw the bounding boxes that correspond to the meshes for each model. If they are drawn together with the model, with depth testing enabled, the wireframes should be hidden wherever they pass behind solid objects.

Every BoundingBox can give us a Vector3 array, through GetCorners, which represents the eight corners of the box. The first four corners are on the front side, and the last four corners are on the back. We are going to use a line list to draw the 12 edges of the box. Each line connects a pair of corners, and the following index array forms the edges:

```csharp
// Initialize an array of indices for the box. 12 lines require 24 indices
short[] bBoxIndices = {
    0, 1, 1, 2, 2, 3, 3, 0, // Front edges
    4, 5, 5, 6, 6, 7, 7, 4, // Back edges
    0, 4, 1, 5, 2, 6, 3, 7  // Side edges connecting front and back
};
```

Now in the drawing loop, we will loop through the bounding boxes for the model, set the vertices and draw a LineList for those using any desired effect. This example uses a BasicEffect called boxEffect.

```csharp
// Use inside a drawing loop
foreach (BoundingBox box in boundingBoxes)
{
    Vector3[] corners = box.GetCorners();
    VertexPositionColor[] primitiveList = new VertexPositionColor[corners.Length];

    // Assign the 8 box vertices
    for (int i = 0; i < corners.Length; i++)
    {
        primitiveList[i] = new VertexPositionColor(corners[i], Color.White);
    }

    /* Set your own effect parameters here */
    boxEffect.World = Matrix.Identity;
    boxEffect.View = View;
    boxEffect.Projection = Projection;
    boxEffect.TextureEnabled = false;

    // Draw the box with a LineList
    foreach (EffectPass pass in boxEffect.CurrentTechnique.Passes)
    {
        pass.Apply();
        GraphicsDevice.DrawUserIndexedPrimitives(
            PrimitiveType.LineList, primitiveList, 0, 8,
            bBoxIndices, 0, 12);
    }
}
```

In practice, if you have changed the scale, position, or rotation of your model, make sure to apply the same transformations to all the boxes as well. Also, remember that it is best to move any shader parameters that won’t change outside of the drawing loop, and set them only once.

That’s all there is to it. This should render solid colored wireframe boxes, not just around each model as a whole, but around every mesh it contains.
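Both suggestions can be sketched in one helper. This is only an illustration under a few assumptions: BoundingBoxRenderer and DrawBoxes are hypothetical names, the model's own transform is passed in as `world`, and the boxes were built without that transform. Passing it as the effect's World matrix makes the drawn wireframes follow the model:

```csharp
using System.Collections.Generic;
using Microsoft.Xna.Framework;
using Microsoft.Xna.Framework.Graphics;

public static class BoundingBoxRenderer
{
    // Reused for every box so the vertex array is not reallocated per box
    private static readonly VertexPositionColor[] boxVertices = new VertexPositionColor[8];

    // Hypothetical helper: draws each box as a wireframe, transformed by 'world'
    public static void DrawBoxes(GraphicsDevice device, BasicEffect boxEffect,
        IEnumerable<BoundingBox> boxes, short[] boxIndices,
        Matrix world, Matrix view, Matrix projection)
    {
        // Parameters shared by all boxes are set once, outside the loop
        boxEffect.World = world;
        boxEffect.View = view;
        boxEffect.Projection = projection;
        boxEffect.TextureEnabled = false;
        boxEffect.VertexColorEnabled = true;

        foreach (BoundingBox box in boxes)
        {
            // Copy the 8 corners of this box into the shared vertex array
            Vector3[] corners = box.GetCorners();
            for (int i = 0; i < corners.Length; i++)
            {
                boxVertices[i] = new VertexPositionColor(corners[i], Color.White);
            }

            // 12 lines, indexed by the 24 entries of boxIndices
            foreach (EffectPass pass in boxEffect.CurrentTechnique.Passes)
            {
                pass.Apply();
                device.DrawUserIndexedPrimitives(
                    PrimitiveType.LineList, boxVertices, 0, 8,
                    boxIndices, 0, 12);
            }
        }
    }
}
```

A call might look like `BoundingBoxRenderer.DrawBoxes(GraphicsDevice, boxEffect, boundingBoxes, bBoxIndices, modelWorld, View, Projection);` where modelWorld stands for whatever matrix you draw the model with. Note that the wireframes drawn this way rotate with the model, so they are useful for debugging placement, while culling and picking tests still need boxes in world space.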